Emotion Role Labeling and `\par`{=latex} Stimulus Detection`\footnote{Published as blog post at \url{https://www.romanklinger.de/blog/2023-04-24-roles/}}`{=latex}

Emotion Analysis and the Need for Structured Predictions

Emotion analysis, as I described it in my previous blog post on appraisal theories, typically makes predictions for a predefined textual unit. This could be a sentence, a Tweet, or a paragraph. For instance, given the text

I am very happy to be able to meet Donald Trump.

one could task an automatic emotion analysis system with outputting:

1. the emotion that the person writing the text felt at writing time or wants to express (author emotion),

2. the emotion that a person might develop based on the text (reader emotion),

3. the emotion that is explicitly mentioned in the text (text-level emotion).

For (1), it’s pretty clear that the author of the text (“I”) feels joy. The text expresses that quite clearly, which also fits (3). What emotion the reader might feel (2) depends on various aspects. If they like the author and Donald Trump, for instance, they are more likely to share the author’s joy than to feel a negative emotion. I also think that (3) is not really a task; it is rather an underspecified setup that leaves open whose emotion is to be detected.

There has been some work on the question of perspective, i.e., the reader vs. the writer [@Buechel2017]. What we cannot do with such a text classification approach, however, is extract the emotions of other mentioned entities, here “Donald Trump”. The difference between these tasks is that there is always an author and a reader, while the entities are flexible parts of the text. To assign an emotion to an entity, we first need to know which entities the text mentions.

Whether text classification is sufficient depends on the actual task. For social media analysis, extracting the emotion of the authors of full tweets makes a lot of sense. For literature, the author’s emotion is obviously not that relevant, and the same holds for the journalist’s emotion when writing a news headline. For some domains, it is much more intuitive to look at the emotions of the entities that are mentioned. Given, for instance, the following news headline (from @Bostan2020)

A couple infuriated officials by landing their helicopter in the middle of a nature reserve.

the question of which emotion the author felt is probably irrelevant: the author is a journalist who probably does not feel anything about the event, and if they do, it hardly matters. We might be interested in what emotion the headline causes in a reader, for instance to improve recommendation systems (to read only good news, or to find headlines that are suitable for clickbait). Still, for the analysis of news, the emotions felt by the interacting entities are arguably more relevant. Here, we would like to know that the “officials” are described as angry. We could also try to infer an emotion of “A couple”: perhaps they were pretty happy (after all, they have a helicopter).

Coming back to the example of “The Sorrows of Young Werther” that was mentioned earlier, finding out which emotions are ascribed to the entities in a novel clearly requires more than text-level classification if the analysis is not to become an oversimplification.

Finally, we might also want to know which event (or object, or other person) is described as the cause of the emotion. Being able to do that would allow us to automatically extract social network representations [@Barth2018] and to understand which stimuli are frequently described as causing a specific emotion.

Structured Sentiment Analysis

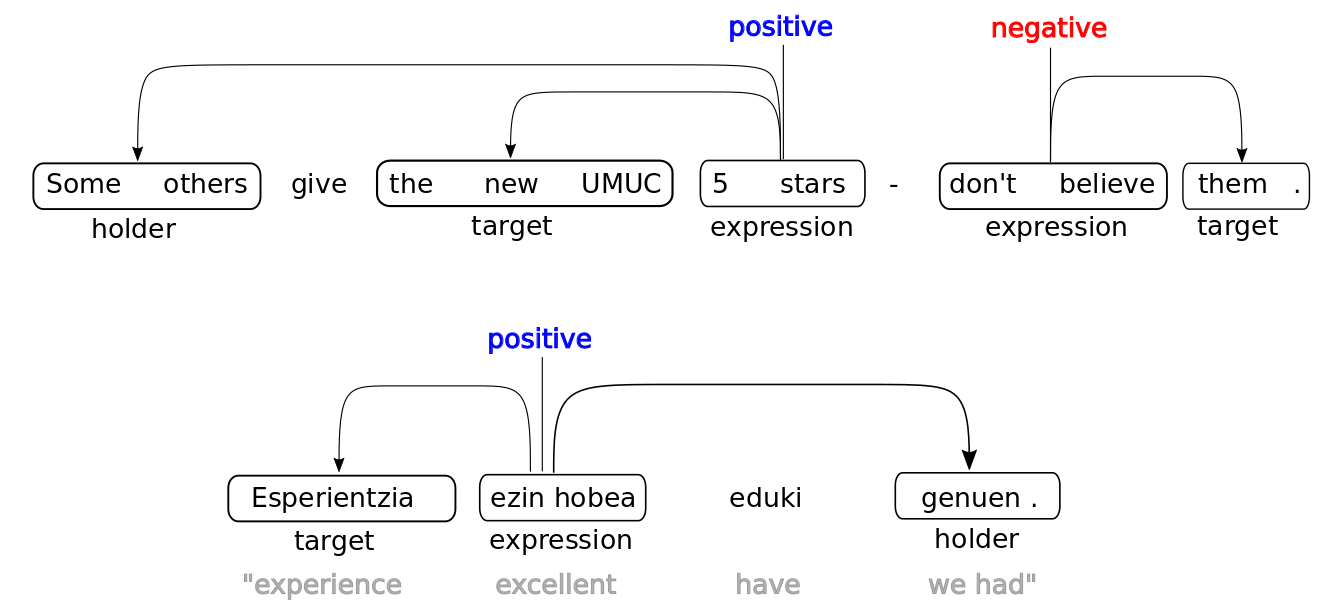

All of this is closely related to another, more popular task that you might have heard of: aspect-based sentiment analysis. It aims to understand not only whether a text is positive, but also which aspect is described as positive, who the opinion holder is, and which words express this opinion. This is now an established task in sentiment analysis. As a recent example, the SemEval Shared Task on Structured Sentiment Analysis [@Barnes2022] aimed at detecting the parts of the text that correspond to the opinion holder, the expression, and the target, as the organizers illustrate in Figure \ref{structuredsentiment}.

The setup of structured sentiment analysis or aspect-based sentiment analysis is older and more established than structured emotion analysis. However, transferring sentiment analysis to emotion analysis is not entirely straightforward. One reason is that the tasks do not align perfectly:

- Detecting an opinion holder in sentiment analysis totally makes sense. Such a thing as an “emotion holder”, however, does not really exist. It would be the person experiencing or feeling an emotion, whom we could refer to as the emoter (we could also say feeler, but that word is more ambiguous). This also shows one difference between emotion analysis and sentiment analysis in the sense of opinion analysis: expressing an emotion is often not a voluntary process (sometimes not even a conscious one), while expressing an opinion more often is. Also, opinions may arise more from a conscious cognitive process.

- The aspect/target in sentiment analysis might correspond to two things in emotion analysis. First, it can be a target: I can be angry at something or someone that is not necessarily the cause of that emotion. I can be angry at a friend because she ate my emergency supply of chocolate; the friend is the target, while her eating the chocolate is what caused my anger. But I cannot be sad at somebody. Second, it can correspond to the stimulus or cause of an emotion, which is what we care more about in emotion analysis.

- The evaluative phrase in sentiment analysis (something is good or bad) pretty clearly corresponds to emotion words (something makes someone sad or happy).
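The correspondence in the list above can be made concrete with a small data structure. The following is a minimal sketch, assuming character-offset spans, of how one emotion relation in the headline from @Bostan2020 could be represented. The class and field names are hypothetical, the role names follow the terminology of this post, this is not the actual file format of any of the corpora discussed below, and the span boundaries are one plausible reading rather than the gold annotation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    """A character-offset span [start, end) in the annotated text."""
    start: int
    end: int

    def text(self, doc: str) -> str:
        return doc[self.start:self.end]


@dataclass
class EmotionRoleAnnotation:
    """One emotion relation (hypothetical format): who feels what, expressed by
    which words, directed at whom, and caused by what."""
    emotion: str
    emoter: Span                     # person experiencing the emotion (cf. opinion holder)
    cue: Span                        # emotion word(s) (cf. evaluative phrase)
    target: Optional[Span] = None    # whom/what the emotion is directed at
    stimulus: Optional[Span] = None  # what caused the emotion


def span_of(doc: str, phrase: str) -> Span:
    """Span of the first occurrence of `phrase` in `doc`; raises ValueError if absent."""
    start = doc.index(phrase)
    return Span(start, start + len(phrase))


headline = ("A couple infuriated officials by landing "
            "their helicopter in the middle of a nature reserve.")

# Illustrative annotation; the span boundaries are an assumption, not corpus data.
annotation = EmotionRoleAnnotation(
    emotion="anger",
    emoter=span_of(headline, "officials"),
    cue=span_of(headline, "infuriated"),
    target=span_of(headline, "A couple"),
    stimulus=span_of(headline, "landing their helicopter in the middle of a nature reserve"),
)

for role in ("emoter", "cue", "target", "stimulus"):
    print(f"{role:>8}: {getattr(annotation, role).text(headline)}")
```

Keeping `target` and `stimulus` as separate optional fields reflects the distinction above: an emotion can be directed at someone who is not its cause, and some emotions, like sadness, have no target at all.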

To understand the relations between these tasks, we conducted the project SEAT (Structured Multi-Domain Emotion Analysis from Text) between 2017 and 2021. Its successor, CEAT, started in 2021.

Data Sets and Methods for Full Structured Emotion Analysis

There are now a couple of data sets available for developing systems that detect emoters and causes. Recently, the SRL4E project aggregated several of them into a common format [@Campagnano2022], including the ones by @gao_overview_2017, @liew-etal-2016-emotweet, @Mohammad2014, and @aman-szpakowicz-2007-identifying. In this blog post, I will focus on our own work, namely @Kim2018, @Kim2019, and @Bostan2020. x-enVENT [@troiano-EtAl:2022:LREC] is not part of SRL4E because it was published more recently, but we will also talk about it.

The two corpora by @Kim2018 and @Bostan2020 were designed as resources for developing models that recognize emotion labels for all potential emoters mentioned in a text, together with the relations between them (e.g., that one entity is part of a target or a cause).

There are two main differences between these data sets:

- Annotation Procedure (crowdsourcing vs. carefully trained annotators)

- Domain (Literature vs. News headlines)

The REMAN corpus

When we started annotating the REMAN corpus, we were involved in the CRETA Project, a platform that combined multiple projects from the digital humanities. There was some focus on literary studies, and therefore we decided to annotate literature. We chose Project Gutenberg because of its relative diversity and accessibility. However, literature comes with challenges: it is not exactly written to communicate facts concisely and clearly. Emotion causes and the associated roles can be distributed across longer text passages, and we expected the annotation to be difficult because of the artistic style. This led to some decisions:

- Each data instance consists of three sentences, of which the middle one is selected to contain an emotion expression. The surrounding sentences are annotated only for roles.

- We performed the annotation with students from our institute with whom we could interactively discuss the task (before fixing the annotation guidelines and letting them annotate independently).
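The instance format from the list above can be sketched as a window over a text that has already been split into sentences. This is a minimal illustration under assumptions, not the REMAN preprocessing: the function name `three_sentence_instances`, the dictionary keys, and the empty-string handling at document boundaries are hypothetical, and how the center sentences were selected is outside the sketch (their indices are simply given).

```python
from typing import Dict, List, Sequence


def three_sentence_instances(sentences: Sequence[str],
                             emotion_idx: Sequence[int]) -> List[Dict[str, str]]:
    """Build one (previous, center, next) instance per sentence index in `emotion_idx`.

    The center sentence contains the emotion expression; the context sentences
    are only there so that roles (e.g., a stimulus) outside the center can be
    annotated. At document boundaries, the missing context is an empty string.
    """
    instances = []
    for i in emotion_idx:
        instances.append({
            "previous": sentences[i - 1] if i > 0 else "",
            "center": sentences[i],
            "next": sentences[i + 1] if i + 1 < len(sentences) else "",
        })
    return instances


# Invented example text: the emotion is expressed in the middle sentence,
# while its stimulus is described in the following one.
sentences = [
    "The letter arrived in the morning.",
    "She wept with joy when she read it.",
    "Her brother had finally come home.",
]
for instance in three_sentence_instances(sentences, emotion_idx=[1]):
    print(instance)
```

In this toy example, the stimulus (the brother’s return) only appears in the following sentence, which is why the context sentences are annotated for roles.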

These decisions led to acceptable inter-annotator agreement, which was nevertheless clearly below that of more factual tasks. Detecting the cause spans was particularly challenging. We attributed this to the subjective nature of emotions and to the challenging domain.

The GoodNewsEveryone corpus

For the GoodNewsEveryone corpus, we therefore decided to move to crowdsourcing, which allowed us to collect multiple subjective annotations that were then aggregated. Emotion role labeling is a structured task, which required a multi-step annotation procedure. To avoid making the data more difficult than necessary, we chose a domain that is characterized by short instances: news headlines. This, however, came with another set of difficulties, namely the missing context. We did not anticipate how hard it would be for annotators to interpret specific events. This was particularly the case when annotators from the US were tasked with annotating UK headlines (or the other way around).
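To give an idea of what aggregating multiple subjective annotations can look like, here is a minimal sketch of one simple strategy: majority voting over emotion labels and token-wise majority voting over role spans. This is an assumption for illustration; it is not necessarily the aggregation procedure used for GoodNewsEveryone, and the function names, the `min_votes` threshold, and the tie handling are hypothetical choices.

```python
from collections import Counter
from typing import Optional, Sequence, Set


def majority_label(labels: Sequence[str], min_votes: int = 2) -> Optional[str]:
    """Most frequent emotion label, or None if it has fewer than `min_votes`
    votes or is tied with another label (i.e., no agreement)."""
    ranked = Counter(labels).most_common()
    if not ranked:
        return None
    best, count = ranked[0]
    if count < min_votes or (len(ranked) > 1 and ranked[1][1] == count):
        return None
    return best


def majority_tokens(spans: Sequence[Set[int]], n_annotators: int) -> Set[int]:
    """Token indices that more than half of the annotators included in their span
    (annotators who marked nothing contribute an empty set)."""
    votes = Counter(tok for span in spans for tok in span)
    return {tok for tok, count in votes.items() if 2 * count > n_annotators}


# Three hypothetical annotators label the helicopter headline.
print(majority_label(["anger", "anger", "disgust"]))
# Emoter spans as token indices ("A"=0, "couple"=1, "infuriated"=2, "officials"=3).
print(majority_tokens([{3}, {3}, {2, 3}], n_annotators=3))
```

Returning `None` on ties keeps instances without agreement visible instead of silently picking one label; whether to discard, re-annotate, or keep such instances is a separate design decision.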

Simplifying Role Labeling to Stimulus Detection or Entity-Specific Predictions

To our knowledge, there is still no work on automatically extracting full emotion role graphs. The most popular subtask is arguably emotion cause/stimulus detection, in which the goal is to detect the part of the text that describes what caused an emotion. For Mandarin, it is common to formulate this task as clause classification. In English, however, stimuli seem to be often described by non-consecutive text passages or cannot be clearly mapped to clauses. Therefore, for English, it is more common to detect emotion stimuli on the token level [@Oberlaender2020]. We also worked on stimulus detection quite a bit, as part of the corpus papers mentioned above, and additionally for German [@DoanDang2021]. We also wanted to understand whether knowledge about the roles can improve emotion classification [@Oberlaender2020b] (yes), and how emotions are actually ascribed to characters in literature [@kim-klinger-2019-analysis] (it depends on the emotion category).
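The difference between the two formulations is easiest to see on a single example. The following minimal sketch encodes the same stimulus annotation once as token-level BIO tags, as used in sequence labeling, and once as binary clause labels. The example sentence, the whitespace tokenization, and the comma-based clause split are simplifying assumptions for illustration; real systems rely on proper tokenizers and syntactic clause segmentation.

```python
from typing import List, Tuple


def bio_tags(n_tokens: int, span: Tuple[int, int], label: str = "STIM") -> List[str]:
    """Token-level encoding of a stimulus span [start, end):
    B- on the first token, I- on the following ones, O elsewhere."""
    start, end = span
    return [f"B-{label}" if i == start else f"I-{label}" if start < i < end else "O"
            for i in range(n_tokens)]


def split_on_commas(tokens: List[str]) -> List[List[str]]:
    """Crude clause segmentation (assumption): a clause ends after each comma."""
    clauses, current = [], []
    for tok in tokens:
        current.append(tok)
        if tok == ",":
            clauses.append(current)
            current = []
    if current:
        clauses.append(current)
    return clauses


def clause_labels(clauses: List[List[str]], span: Tuple[int, int]) -> List[int]:
    """Clause-level encoding: 1 if a clause overlaps the stimulus span, else 0."""
    start, end = span
    labels, offset = [], 0
    for clause in clauses:
        c_start, c_end = offset, offset + len(clause)
        labels.append(int(c_start < end and start < c_end))
        offset = c_end
    return labels


tokens = "She was angry because the bus was late , so she walked home".split()
stimulus = (4, 8)  # "the bus was late"
print(list(zip(tokens, bio_tags(len(tokens), stimulus))))
print(clause_labels(split_on_commas(tokens), stimulus))
```

The clause-level encoding marks the whole first clause, including “She was angry because”, as the cause, while the token-level tags isolate “the bus was late”. A stimulus made of several non-consecutive passages would simply receive several B-/I- runs.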

We decided to additionally follow another research direction. While the emotion stimulus clearly plays an important role as the trigger of the affective sensation, there is no emotion without the person experiencing it. If we believe that emotions help us survive in a social world, we also need to put the entity that feels something in the spotlight. Our first attempt was @Kim2019, in which we annotated the data based on entity relations.

We left it to the automatic model to figure out which parts of the text are important for deciding which emotion somebody feels, and took the stance that the relations between characters are what needs to be analyzed. We did that with a pipeline model, which detects entities, assigns emotions, and aggregates them into a graph.

This work most directly follows the motivation of analyzing social networks: we evaluated the model on the network level, so it was fine to miss a mention of an entity relation as long as we found the relation somewhere in the text.
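The following minimal sketch illustrates the idea of a network-level evaluation: mention-level relations are collapsed into a set of directed, emotion-labeled edges, and precision and recall are computed on these edge sets. The edge format, the function names, and the example are hypothetical, and this is a simplified illustration rather than the evaluation code of @Kim2019.

```python
from typing import Iterable, Set, Tuple

# Hypothetical edge format: (emoter, emotion, other character).
Edge = Tuple[str, str, str]


def to_graph(mention_level: Iterable[Edge]) -> Set[Edge]:
    """Collapse mention-level relations into a set of directed, emotion-labeled edges.
    Repeated mentions of the same relation become a single edge."""
    return set(mention_level)


def edge_scores(predicted: Set[Edge], gold: Set[Edge]) -> Tuple[float, float, float]:
    """Precision, recall, and F1 computed on graph edges instead of on mentions."""
    tp = len(predicted & gold)
    precision = tp / len(predicted) if predicted else 0.0
    recall = tp / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Invented characters and relations for illustration.
gold = to_graph([("Alice", "joy", "Bob"), ("Alice", "joy", "Bob"),
                 ("Carol", "anger", "Alice")])
# The model finds Alice's joy towards Bob in only one of its two mentions,
# which still counts as a correct edge, and mislabels Carol's emotion.
predicted = to_graph([("Alice", "joy", "Bob"), ("Carol", "fear", "Alice")])
print(edge_scores(predicted, gold))
```

Because both sides are reduced to sets, missing one of two mentions of the same relation costs nothing, which is exactly the leniency described above.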

The second and more recent paper connected our work on appraisal-based emotion analysis with emotion role labeling [@troiano-EtAl:2022:LREC]. We went back to in-house annotations by trained experts, because we wanted to acquire entity-specific emotion and appraisal annotations, for which we first needed to develop the annotation procedure together with the annotators. Therefore, this paper also acted as a preliminary study for @Troiano2023, which we already discussed in a previous blog post. Here, we reannotated a corpus of event reports (based on @troiano-etal-2019-crowdsourcing and @hofmann-etal-2020-appraisal), not only from the perspective of the author (the person who lived through the event and told us about it), but also from the perspective of every person participating in the event.

We left the relations between entities underspecified, but they can be observed quite clearly in the analysis of the data, both on the emotion and on the appraisal level. For instance, when one person is annotated as feeling responsible, the probability that another person is also annotated as responsible decreases. As self/other-responsibility is an appraisal dimension known to be relevant for the development of guilt, shame, and pride, this also influences the emotion. We also performed automatic modeling experiments, which clearly showed that simple text classification does not fully capture the emotional content of a text: it conflates multiple emotional dimensions into one [@Wegge2022].
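What conflating means here can be illustrated with a toy example. The following minimal sketch contrasts experiencer-specific emotion labels with the text-level label set obtained by collapsing them. The report, the labels, and the union-based collapse are assumptions for illustration, not data from x-enVENT and not the modeling setup of @Wegge2022.

```python
from typing import Dict, Set

# Hypothetical experiencer-specific annotation of an invented event report,
# "I forgot my sister's birthday, and she was really disappointed.":
# the author ("I") and the mentioned sister each receive their own emotions.
per_experiencer: Dict[str, Set[str]] = {
    "I": {"guilt"},
    "sister": {"sadness"},
}


def collapse_to_text_level(annotations: Dict[str, Set[str]]) -> Set[str]:
    """Text-level view: the union of all experiencers' emotions, without the emoters."""
    return set().union(*annotations.values())


text_level = collapse_to_text_level(per_experiencer)
print(sorted(text_level))
```

The text-level result contains both guilt and sadness but no longer says who feels which, and this mapping cannot be recovered from the collapsed set.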

Summary

In this blog post, I summarized our work on emotion role labeling, which is a way to represent the emotions described in a text. Compared to text classification, it can represent more accurately what is in the text, but the modeling is also more challenging. Because of that, various subtasks have been defined, including experiencer-specific emotion detection and stimulus detection, each of which focuses on just one role.

Why is this important? Most of what I wrote about concerns resources, and only a bit concerns modeling and automatic systems. Before automatic systems can be developed, we need corpora, not only to train models, but also because creating them is a process through which we come to understand the phenomenon. I think that the emotion role labeling formalism is powerful enough to represent all relevant aspects of emotions as they are expressed in text, but it is challenging to create high-quality corpora. Further, annotation is challenging because a stimulus sometimes cannot be located exactly in the text. Emotions do not develop based on just one single event that can be referred to with a name or a short text. That might be fine for news data, but in literature, an event can be described with many more words, perhaps stretching over pages or even a whole book.

What comes next? Some data sets exist now, and we have a good understanding of the challenges in annotation. For each subtask, there also exist various modeling approaches. We have also seen that emotion classification and role detection influence each other [@Oberlaender2020]. While emotion stimulus detection and emotion classification are now commonly addressed as a joint modeling task for Mandarin [@xia-ding-2019-emotion], we do not yet have joint models that find all roles and emotion categories together. Such structured prediction models might not only provide a better understanding of what is expressed in a text than isolated predictions; their performance on each subtask might also improve, because the various variables interact. In my opinion, developing such models is still one of the most important tasks in emotion analysis from text. This would not only help to develop better-performing natural language understanding systems, but could also contribute to a better understanding of how emotions are realized in text.

Acknowledgements

I would like to thank all my collaborators with whom I worked on role labeling and emotions. These are (in no specific order) Laura Oberländer, Evgeny Kim, Bao Minh Doan Dang, Kevin Reich, Max Wegge, Valentino Sabbatino, Amelie Heindl, Jeremy Barnes, Tornike Tsereteli, and Enrica Troiano.

This work has been funded by the German Research Foundation (DFG) in the project SEAT, KL 2869/1-1.