Conference Report: LREC-COLING 2024

At the end of May 2024, I participated in the Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING) in Turin. Both COLING and LREC enrich the landscape of competitive conferences for publishing in natural language processing and computational linguistics. While ACL, EMNLP, NAACL, and EACL tend to focus on accepting high-impact papers, partly by keeping the acceptance rate low (~25%), COLING and LREC are traditionally more inclusive. COLING and LREC recently had acceptance rates of around 28% and 65%, respectively. While COLING’s acceptance rate has been somewhat higher in the past, these numbers are generally typical for these venues.

LREC and COLING took place together this year. The general chairs explained that this is a one-time event to reschedule the two conferences so that LREC takes place in even years and COLING in odd years; so far, both had been held in even years. The joint conference was interesting for submitting authors because it was not really clear what to expect. The organizers also seem to have been surprised by the number of submitted papers.

Overall, there were 3,471 submissions and 1,554 acceptances. Of those, 275 were presented as talks, 837 as posters, and 442 remotely. The acceptance rate was therefore just under 45% (1,554 / 3,471 ≈ 44.8%). It’s difficult to say, but tracks that were more LREC-like might have had a higher acceptance rate and more COLING-style tracks a lower one. I suspect this because the track with the most acceptances was “Corpora and Annotation”. LREC’s idea has always been to optimize for high recall here, given that resources may have an impact on low-resource languages without showing a high impact across the whole community. However, I’d like to note that LREC only started reviewing full papers in 2020! Until 2018, extended abstracts were submitted and reviewed, and the authors of accepted abstracts were invited to submit a full paper, which was not reviewed again. I am quite happy that this has changed. The overall quality of papers, posters, and presentations was comparable to other conferences, but up to 2018, I saw a couple of presentations at LREC where a review of the full paper might have helped improve the quality of the work.
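The arithmetic behind these figures is easy to double-check. A trivial Python sketch that only reuses the numbers reported in the opening session (nothing else is assumed):

```python
# Figures as reported in the opening session.
submissions = 3471
accepted = {"oral": 275, "poster": 837, "remote": 442}

# The three presentation formats should add up to all accepted papers.
total_accepted = sum(accepted.values())
acceptance_rate = total_accepted / submissions

print(f"accepted: {total_accepted}")
print(f"acceptance rate: {acceptance_rate:.1%}")  # prints "acceptance rate: 44.8%"
for fmt, n in accepted.items():
    # Share of the accepted papers per presentation format.
    print(f"{fmt}: {n / total_accepted:.1%}")
```

The three formats indeed sum to the 1,554 acceptances, so the rate of 44.8% refers to all accepted papers, including remote presentations.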

In the opening session, the program chairs also shared which countries most papers came from (ranked list: China, USA, Germany, France, Japan, UK, Rep. of Korea, Spain, Italy, India). While China has been contributing more and more papers to NLP venues for a couple of years, I was a bit surprised to see quite many papers from Korea, which I do not think I had noticed before. Maybe COLING 2022, which took place in Korea, made the conference more popular in this part of the world. It was also quite interesting to see many papers that worked on Korean. There were also some differences between countries in acceptance rates, but I am not sure whether these are just artefacts (the numbers of submissions from the countries with the highest rates were low), so I won’t repeat those numbers here. The overall number of submissions is also roughly mirrored in the numbers of participants: China (472), USA (313), Germany (286), Italy (237), France (221), UK (143), Japan (141), Korea (91). I was also very happy to see that there were 89 scholars from Ukraine.

Overall, the conference felt very much like COLING and LREC together: one could clearly see the origins of this joint conference, and I liked this a lot.

As usual in our field, most papers were presented as posters, and LREC-COLING was no exception in that there is no difference in quality between orally presented papers and posters. Posters are often much more interactive than talks, and it’s great to have discussions there. I still like to go to talks, particularly on topics where I am not an expert. For me, oral presentations are better for learning something new that I don’t know much about. I don’t feel comfortable asking a poster presenter for a very basic introduction while they want to talk about their most recent research.

Tutorials

For the first time, I was asked to be a tutorial co-chair. I had acted as a senior area chair a couple of times, but that’s a more guided process. I was very happy to do this together with Naoaki Okazaki, who had already served as a tutorial chair. Without him, I would not have been able to do this job; I learned a lot from him.

Thanks to his experience, nearly everything went very well with the tutorials, as far as I can tell. We selected a good set of tutorials that attracted people from various areas. We received 20 submissions, from which we selected 13 to be taught at the conference. Of those, three were introductory (one of them to an adjacent topic), and the majority presented cutting-edge topics. Unsurprisingly, large language models were a popular topic, covered by multiple tutorials with varying perspectives on multimodality, evaluation, knowledge editing and control, hallucination, and bias. Other tutorials covered argument mining, the semantic web, dialogue systems, semantic parsing, inclusion in NLP systems, and applications in chemistry. You can find the tutorial summaries in Klinger et al. (2024). I attended two tutorials, one on knowledge editing and one on recognizing and mitigating hallucinations.

Only one thing did not go well: for one tutorial, the presenters did not come on site but presented entirely virtually, which we had not intended. We believe that we communicated that at least one presenter per tutorial needed to be on site. It’s currently not clear to me what caused this presumed misunderstanding, but for future tutorials, I would suggest asking proposers to tick a box at submission time confirming that they will come to the conference if the proposal is accepted. It might also be a good idea to check with the local organizers whether the presenters actually registered for the conference early enough.

If you participated in the tutorials as a presenter or attendee, please let me know if you have any feedback. LREC-COLING will compile a summary document to be handed over to the next organizing team, and I will make sure to pass along any constructive feedback.

Own Contributions from Bamberg and Stuttgart

Stuttgart was very well represented in Turin, as usual, but as this was my first conference with my Bamberg affiliation, I will focus on the contributions that came from Bamberg.

We had two papers in which my group was involved:

- Velutharambath, Wührl, and Klinger (2024) present our Defabel corpus, in which we asked people to argue for a given statement (“Convince me that camels store water in the hump.”). Depending on their own belief, we labeled the argument as deceptive or not. By doing so, we have a corpus in which deceptive and “honest” arguments were created for the same statements. Our intent was to disentangle fact-checking and deception detection.

- Wemmer, Labat, and Klinger (2024) describe the creation of customer-agent and dream corpora annotated with cumulative emotion labels. Most emotion corpora are annotated either for the whole text, for isolated sentences, or for sentences in context. We compiled a corpus in which the raters only had access to the prior context, which reflects how we actually read text or talk to other people: we cannot look into the future!
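One way to picture the prior-context setup: each unit is shown together with everything that precedes it, but nothing that follows. A minimal sketch in Python; the function name and the toy dialogue are invented for illustration and are not taken from the actual annotation tooling of the paper.

```python
from typing import Iterator, List, Tuple


def prior_context_units(sentences: List[str]) -> Iterator[Tuple[List[str], str]]:
    """Yield (context, target) pairs in which the context contains only the
    sentences before the target, like an annotator who cannot look ahead."""
    for i, sentence in enumerate(sentences):
        yield sentences[:i], sentence


# Hypothetical toy dialogue, not from the corpus.
dialogue = [
    "Customer: My order never arrived.",
    "Agent: I'm sorry to hear that, let me check.",
    "Agent: It was delivered to the wrong address; I'll resend it today.",
]
for context, target in prior_context_units(dialogue):
    print(len(context), "->", target)
```

The first unit is judged with no context at all, and the last one sees the whole preceding conversation, so a label can change as the dialogue unfolds.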

I was happy to also see another contribution from Bamberg, namely from the group of Andreas Henrich:

- Fruth, Jegan, and Henrich (2024) discuss, in their paper at the “Workshop on DeTermIt! Evaluating Text Difficulty in a Multilingual Context”, a reinforcement-learning-based approach to German text simplification. Noteworthy is that they also tackle hallucination to some degree, namely by checking whether the simplified text includes any named entities that were not in the original, non-simplified text.
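At its core, such an entity check is a set difference. A minimal sketch, assuming that some NER system has already extracted entity strings from both texts; the function names and the penalty term are hypothetical and not taken from Fruth, Jegan, and Henrich (2024), whose actual reward design may differ.

```python
from typing import Iterable, Set


def novel_entities(source_entities: Iterable[str],
                   simplified_entities: Iterable[str]) -> Set[str]:
    """Entities in the simplified text that do not occur in the source.
    Comparison is case-insensitive; entity extraction is assumed to happen elsewhere."""
    known = {e.casefold() for e in source_entities}
    return {e for e in simplified_entities if e.casefold() not in known}


def entity_penalty(source_entities: Iterable[str],
                   simplified_entities: Iterable[str],
                   weight: float = 1.0) -> float:
    """Hypothetical reward term: minus `weight` per unsupported entity."""
    return -weight * len(novel_entities(source_entities, simplified_entities))


source = ["Bamberg", "Otto-Friedrich-Universität"]
simplified = ["Bamberg", "München"]
print(novel_entities(source, simplified))
print(entity_penalty(source, simplified))
```

Here "München" is flagged because it never appears in the source, which is exactly the kind of invented detail such a check is meant to catch.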

My Favorite Contributions

I found quite a number of talks and papers very interesting. This only reflects my personal opinion, and if I do not mention a particular paper, that probably only means that I did not have the time to see its presentation. There are many interesting papers in the proceedings, and I have not gone through all of them yet.

Invited Talks

Before I mention my favorite papers, I’d like to say something about the invited talks. There were three of them, with quite different foci. Here, I’ll mention the two that I found most interesting.

Roger Levy talked about mistakes that humans and language models make, sometimes in the same way and sometimes in different ways, and gave possible explanations for both. His talk was full of interesting text completion examples, for instance “The children went outside to…” or “The squirrel stored some nuts in the…”, where in the latter case, apparently, many people answer “tree”.

Michele Loporcaro talked about dialectal differences in Italy. I found this inspiring, not only because there was barely anything in the talk that I knew before (not a linguist…), but also because it gave me an interesting example of linguistic research, to which I am not exposed often enough.

Papers

I found the following papers particularly interesting. I selected them based on my own interests. Given that you are reading this on my blog, there is some chance that you share some research interests with me and will find my selection useful. Still, I want to point out that not including a paper here is absolutely not a negative judgment, even if it is related to my interests. I probably just missed it.

Biomedical and Health NLP:

- Raithel et al. (2024) create a pharmacovigilance corpus across multiple languages. It is annotated with drug names, changes in medication, and side effects, as well as causal relations. Interestingly, the baseline experiments also include cross-lingual experiments (training on multiple languages and testing zero-shot on another one). The performance scores are similar to those of the monolingual experiments, sometimes even higher. The paper might have some overlap with our BEAR corpus, but we had a different focus, namely to develop an entity and argumentative claim resource (Wührl and Klinger (2022), Wuehrl et al. (2024)).

- Giannouris et al. (2024) describe an approach, presented at the DeTermIt! workshop, to automatically summarize clinical trial reports in plain language. I’ve been interested in biomedical text summarization for laypeople for a while, and our FIBISS project was also motivated by such challenges. They contribute an interesting and valuable resource on the topic.
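The zero-shot cross-lingual setup mentioned for Raithel et al. (2024) is commonly organized as a leave-one-language-out split. A minimal sketch; the language codes follow the German, French, and Japanese data named in the paper’s title, while the document IDs are placeholders and the paper’s exact splitting protocol may differ.

```python
from typing import Dict, List, Tuple


def leave_one_language_out(
    data: Dict[str, List[str]],
) -> List[Tuple[str, List[str], List[str]]]:
    """For each language, train on all other languages and test zero-shot on it."""
    splits = []
    for held_out in sorted(data):
        train = [doc for lang in sorted(data) if lang != held_out for doc in data[lang]]
        splits.append((held_out, train, list(data[held_out])))
    return splits


# Placeholder document IDs per language.
corpus = {"de": ["de_doc1", "de_doc2"], "fr": ["fr_doc1"], "ja": ["ja_doc1"]}
for held_out, train, test in leave_one_language_out(corpus):
    print(held_out, len(train), len(test))
```

Each split keeps the test language completely unseen during training, which is what makes the results comparable to a true zero-shot transfer.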

Ensembles/Aggregations of annotators:

- Basile, Franco-Salvador, and Rosso (2024) is a bit of a demo paper. They present a Bayesian approach to annotator aggregation that integrates the Stan probabilistic programming language to specify directed probabilistic models. First of all, I was quite happy to see some probabilistic graphical model work at the conference, and secondly, this really looks like a useful approach. We’ll definitely have a look!

- Flor Miriam Plaza del Arco presented work at the NLPerspectives workshop in which she and her colleagues showed that the annotation-aggregation method MACE can be used to build ensembles of language models that outperform simpler aggregation methods such as majority vote (Plaza-del-Arco, Nozza, and Hovy (2024)). That’s interesting because LLMs are not as diverse as humans, yet the aggregation still works: instruction-tuned LMs show specialization in different tasks. We talked about potential future work in which the components of the ensemble could be conditioned on personas to explicitly make the ensemble as diverse as humans are in annotation tasks. I am curious to see how this goes!
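To make the contrast concrete: majority vote treats every annotator (or model) the same, while MACE-style methods estimate how reliable each annotator is and weight the votes accordingly. The sketch below is not MACE, which is a generative model fitted with EM; it is a much simpler, hypothetical competence-weighted vote that alternates between a consensus and per-annotator accuracy estimates, only to illustrate the idea.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Item = Dict[str, str]  # annotator (or model) name -> label


def majority_vote(items: List[Item]) -> List[str]:
    # Ties are broken by the order in which labels first appear.
    return [Counter(item.values()).most_common(1)[0][0] for item in items]


def competence_weighted_vote(items: List[Item],
                             n_iter: int = 10) -> Tuple[List[str], Dict[str, float]]:
    annotators = sorted({a for item in items for a in item})
    consensus = majority_vote(items)
    weights: Dict[str, float] = {}
    for _ in range(n_iter):
        # Accuracy of each annotator against the current consensus;
        # add-one smoothing keeps the log-odds finite.
        for a in annotators:
            judged = [(item[a], c) for item, c in zip(items, consensus) if a in item]
            agree = sum(label == c for label, c in judged)
            acc = (agree + 1) / (len(judged) + 2)
            weights[a] = math.log(acc / (1 - acc))  # 0 for a coin-flip annotator
        # Re-vote, weighting every label by its annotator's log-odds.
        updated = []
        for item in items:
            scores: Counter = Counter()
            for a, label in item.items():
                scores[label] += weights[a]
            updated.append(max(scores, key=scores.get))
        if updated == consensus:
            break
        consensus = updated
    return consensus, weights


items = [
    {"model_a": "pos", "model_b": "pos", "model_c": "neg"},
    {"model_a": "neg", "model_b": "neg", "model_c": "neg"},
    {"model_a": "pos", "model_b": "pos", "model_c": "pos"},
    {"model_a": "neg", "model_b": "neg", "model_c": "pos"},
]
labels, weights = competence_weighted_vote(items)
print(labels)
print({a: round(w, 2) for a, w in weights.items()})
```

In this toy example, model_c agrees with the consensus only half of the time, so its weight drops to zero. With more annotators of uneven quality, such a weighted vote can overrule a majority formed by unreliable voters, which plain majority vote cannot do.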

Corpus collection and Analysis:

- Jin et al. (2024) report on their study of bragging on social media. They find that rich males brag more about their leisure time, while low-income people focus more on the self. A very interesting analysis. I wonder whether these results could affect general analyses of happiness on social media.

- Dick et al. (2024) describe their corpus of various ways to phrase things in a gender-inclusive manner in German. They include comparatively well-known cases like “Arbeiter:innen”, but also nominalized participles (Lehrende) and abstract nouns (Lehrkräfte). If I understood their work correctly, they collected these latter cases pretty much manually. I think that their corpus would be an interesting resource to build an automatic system that can find unknown and rare cases of such inclusive language. I sometimes find it a bit challenging to always phrase things in a gender-inclusive way, and I’d like to learn from other people how they do that in rare, less established cases than “Studierende”.

- Fiorentini, Forlano, and Nese (2024) create a corpus of Italian WhatsApp messages. An interesting approach: the authors collected their own WhatsApp messages, including voice messages, and asked their interlocutors for consent. The resource does not seem to be available yet, but I am super curious. I remember a paper on trust in social media platforms from a while ago (Nemec Zlatolas et al. (2019)), and this resource might be an interesting opportunity to study such effects computationally.

- Troiano and Vossen (2024) created the CLAUSE-ATLAS corpus. They aim at a full (clause-level) annotation of events and emotions in some books. This can, of course, not be done manually at reasonable cost. They therefore annotate only the beginnings of chapters manually and the rest automatically, and they analyze the agreement between human annotators and a large language model. They find that the agreement is comparable.

- Maladry et al. (2024) build, to the best of my knowledge, the first irony-labeled corpus in which annotators were asked how confident they are that the text is actually ironic. They formulated the labels as a rating scale. Interestingly, automatic systems are better at predicting irony on the instances on which humans were confident. That result is in line with our findings for emotion analysis a couple of years ago (Troiano, Padó, and Klinger (2021)), where we also showed that humans can predict the inter-annotator agreement for the instances they annotated quite well.
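Agreement between a human annotator and a model, as analyzed by Troiano and Vossen (2024), is often reported with a chance-corrected coefficient. As a sketch, here is Cohen’s kappa for two label sequences; whether the paper used exactly this coefficient is an assumption, and the labels below are invented.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Chance-corrected agreement between two annotators labeling the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    freq_a, freq_b = Counter(a), Counter(b)
    # Agreement expected if both labeled independently at their own label rates.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one and the same label throughout
    return (observed - expected) / (1 - expected)


# Invented toy labels for six clauses.
human = ["joy", "anger", "joy", "none", "joy", "none"]
model = ["joy", "anger", "none", "none", "joy", "joy"]
print(round(cohens_kappa(human, model), 3))
```

The two sequences agree on four of six items (0.67), but since both use "joy" and "none" frequently, part of that agreement is expected by chance, and kappa comes out noticeably lower.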

Arguments and News:

- Feger and Dietze (2024) build an argument corpus in which each discussion is kept as a tree. They label arguments as Statements and Reasons and non-arguments as Notifications or None. I think it would be nice to also see persuasiveness labels that compare the arguments within each tree to each other.

- Song and Wang (2024) build an automatic system to persuade people in a specific context, namely to make donations. Their system is a chatbot that can automatically recognize which persuasion strategy might be most promising. They consider “credibility appeal”, “foot-in-the-door”, and “emotional appeal”. Again, that’s super relevant for our EMCONA project, and we’ll consider using this for our work.

- Pu et al. (2024) built a system to automatically generate news reports from scientific texts. Their idea is similar to the analysis in our recent work (Wuehrl et al. (2024)). It’s impressive that they were able to automate such a complex task! I’d be curious to understand whether their automatic system makes the same changes to the text and scientific claims that we found (making the articles more sensational, or turning reported correlations into causal claims).

- Nejadgholi et al. (2024) create counter-stereotype statements. I put this into the argument category because I’d consider counter-stereotype statements attempts to convince the dialogue partner to change a stance. The work is nicely grounded in categories of counter-stereotype strategies, like counter-facts and broadening universals. Here, too, I wonder whether a convincingness study would make sense.

- Kalashnikova, Vasilescu, and Devillers (2024) describe a Wizard-of-Oz study in which they carefully nudge people to change their opinion or emotion. They compare smart speakers, robots, and humans, and … human nudges are most successful.
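The conversation trees of Feger and Dietze (2024) can be pictured as a simple recursive data type. A hypothetical sketch: the class and helper names are invented, only the four labels (Statement, Reason, Notification, None) come from the description above, and the example thread is made up.

```python
from dataclasses import dataclass, field
from typing import Iterator, List

ARGUMENT_LABELS = {"Statement", "Reason"}
NON_ARGUMENT_LABELS = {"Notification", "None"}


@dataclass
class Node:
    text: str
    label: str
    replies: List["Node"] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject labels outside the four-label scheme.
        if self.label not in ARGUMENT_LABELS | NON_ARGUMENT_LABELS:
            raise ValueError(f"unknown label: {self.label}")

    def walk(self) -> Iterator["Node"]:
        # Depth-first traversal over the conversation tree.
        yield self
        for reply in self.replies:
            yield from reply.walk()


def argumentative_share(root: Node) -> float:
    """Fraction of posts in the tree that are labeled as arguments."""
    nodes = list(root.walk())
    return sum(n.label in ARGUMENT_LABELS for n in nodes) / len(nodes)


thread = Node("Cities should ban cars downtown.", "Statement", [
    Node("Because the air quality would improve a lot.", "Reason"),
    Node("Live now: the city council debate on traffic.", "Notification"),
    Node("lol", "None"),
])
print(argumentative_share(thread))
```

Keeping the replies attached to their parents is what would make pairwise comparisons within one tree, such as the persuasiveness labels suggested above, straightforward to set up.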

LLM-Specific things:

- Rao et al. (2024) develop a hierarchy of jailbreak attempts on LLMs. I had not looked much into ways to trick LLMs into doing things they are not supposed to do (like leaking training data), and the authors provide a set of possible approaches. It is interesting to see these weaknesses of existing models.

- Addlesee, Lemon, and Eshghi (2024) describe a study that showcases possible differences between how LLMs and humans respond to requests. In particular, they feed incomplete questions into an LLM and check whether its behaviour is human-like. My favorite example from the poster was: “What is the zip code of…”, and the LLM answers “of Nevada?”.

- Pucci, Ranaldi, and Freitas (2024) report on an experiment on the importance of the order of instructions with varying difficulty. Instead of using answer length or similar proxies to assess difficulty, they rely on Bloom’s taxonomy (remembering, understanding, applying, analyzing/evaluating/creating) and show that fine-tuning an LLM on these categories in order of increasing difficulty leads to better results. This paper is a beautiful example of importing knowledge from psychology and the humanities into machine learning.
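The curriculum idea amounts to ordering the fine-tuning data by cognitive level before training. A minimal sketch, assuming each example already carries a Bloom level label; the six-level order is the standard revised taxonomy, while the field names, the example instructions, and the choice of a single global sort (rather than, say, separate training phases) are assumptions, not the paper’s procedure.

```python
from typing import Dict, List

# Levels of the revised Bloom's taxonomy, from lower to higher cognitive demand.
BLOOM_ORDER = ["remember", "understand", "apply", "analyze", "evaluate", "create"]
BLOOM_RANK: Dict[str, int] = {level: rank for rank, level in enumerate(BLOOM_ORDER)}


def bloom_curriculum(examples: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Order instruction examples from easier to harder Bloom levels.
    sorted() is stable, so examples within a level keep their original order."""
    return sorted(examples, key=lambda ex: BLOOM_RANK[ex["level"]])


# Hypothetical instruction data with hand-assigned levels.
data = [
    {"instruction": "Design an experiment to test the claim.", "level": "create"},
    {"instruction": "What is the capital of Italy?", "level": "remember"},
    {"instruction": "Explain why the sky appears blue.", "level": "understand"},
    {"instruction": "Compare the two arguments.", "level": "analyze"},
]
for ex in bloom_curriculum(data):
    print(ex["level"], "|", ex["instruction"])
```

The resulting list would then be fed to fine-tuning in this order instead of being shuffled.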

Emotions:

- Li, Peng, and Hsu (2024) describe a chat system that can help people regulate their emotions. This is the first work I am aware of that builds on emotion regulation theories. Their system learns that guilt can be tackled with curiosity, and fear with admiration. A very impressive example of how the analysis of a data-driven system confirms knowledge from other fields, which in turn supports the learning approach.

- Bhaumik et al. (2024) describe a corpus and modeling effort for detecting agendas on social media: what do people intend with a particular post? I find this related to our research interest in the EMCONA project, in which we want to understand how people use emotions to persuade others. However, their work is more general and focuses on agendas that are less explicitly formulated in the annotated task.

- Christop (2024) describes her effort to build a Polish speech corpus labeled with emotions. She intends to use it later for text-to-speech systems. The data was created by asking actors to portray specific emotional states.

- Plaza-del-Arco et al. (2024) nicely complements my recent paper on the current state of research on event-centric emotion analysis (Klinger (2023)). The coverage by Flor and her colleagues is much broader than mine, and they particularly point out that the subjective nature of emotions needs to be considered more. Further, quite a large set of emotion models from psychology has not been considered yet.

- Prochnow et al. (2024) describe an (automatically generated) data set of idioms with emotion labels.

- Cortal (2024) reports on an emotion-labeled corpus of dreams. Interestingly, emotions in this DreamBank corpus are mostly expressed quite explicitly. The corpus also contains some semantic role annotations, making it one of the few corpora with structured emotion annotations. We also worked on this for a while, with the REMAN and GoodNewsEveryone corpora (Kim and Klinger (2018), Bostan, Kim, and Klinger (2020)), amongst others. It might be interesting to see how annotations of literature and news compare to those of dreams, and whether emotion role labeling systems could be transferred between these very different domains.

Other things:

- Arimoto et al. (2024) develop a long-term chat system to study how the perceived intimacy between the human and the chat agent develops.

- Jon and Bojar (2024) use an optimization method, a genetic algorithm, to find translations that obtain high evaluation scores but are wrong.

Awards

The best papers of LREC-COLING were:

- Bafna et al. (2024): “When Your Cousin Has the Right Connections: Unsupervised Bilingual Lexicon Induction for Related Data-Imbalanced Languages”

- Someya, Yoshida, and Oseki (2024): “Targeted Syntactic Evaluation on the Chomsky Hierarchy”

Venue and Place

The conference took place in Turin, a nice city that is not too touristy. It has acceptable cycling infrastructure, which I used to get from downtown to the conference center every day. Drivers did not yet seem used to bicycles and did not check at all for bikes when turning at intersections, but the infrastructure prevented serious incidents. It is definitely not perfect infrastructure, but much better than in Stuttgart, so I enjoyed cycling in Turin a lot.

The conference center was in the old Fiat factory Lingotto, which now houses a mall and a car museum in addition to the conference center. I am not a car fan, but the test track on the roof was pretty impressive.

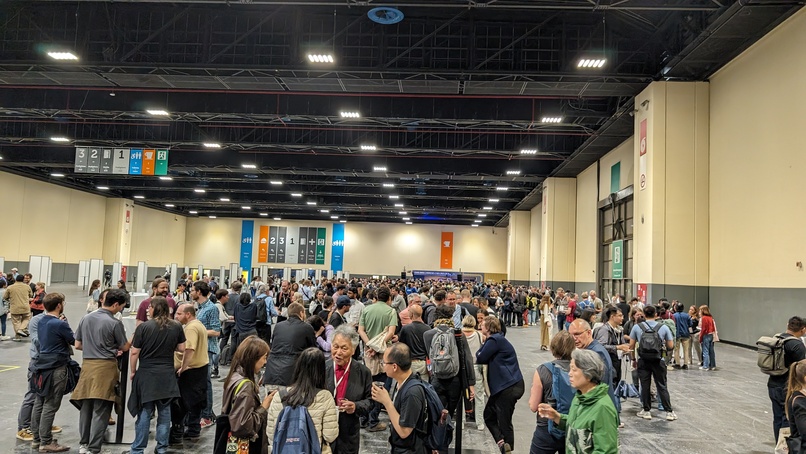

The conference center itself was pretty nice (and huge!). The poster sessions were in a separate hall with a lot of space. While the venue was not as charming as those in Iceland (LREC 2014), Marrakesh (LREC 2008), or Santa Fe (COLING 2018), I enjoyed its proximity to the city.

Altogether, I was very happy with the whole conference, and I am looking forward to COLING 2025 and LREC 2026.

Bibliography

Addlesee, Angus, Oliver Lemon, and Arash Eshghi. 2024. “Clarifying Completions: Evaluating How LLMs Respond to Incomplete Questions.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 3242–49. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.288.

Arimoto, Tsunehiro, Hiroaki Sugiyama, Hiromi Narimatsu, and Masahiro Mizukami. 2024. “Comparison of the Intimacy Process Between Real and Acting-Based Long-Term Text Chats.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 3639–44. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.322.

Bafna, Niyati, Cristina España-Bonet, Josef van Genabith, Benoît Sagot, and Rachel Bawden. 2024. “When Your Cousin Has the Right Connections: Unsupervised Bilingual Lexicon Induction for Related Data-Imbalanced Languages.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 17544–56. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1526.

Basile, Angelo, Marc Franco-Salvador, and Paolo Rosso. 2024. “PyRater: A Python Toolkit for Annotation Analysis.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 13356–62. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1169.

Bhaumik, Ankita, Ning Sa, Gregorios Katsios, and Tomek Strzalkowski. 2024. “Social Convos: Capturing Agendas and Emotions on Social Media.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 14984–94. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1303.

Bostan, Laura Ana Maria, Evgeny Kim, and Roman Klinger. 2020. “GoodNewsEveryone: A Corpus of News Headlines Annotated with Emotions, Semantic Roles, and Reader Perception.” In Proceedings of the Twelfth Language Resources and Evaluation Conference, edited by Nicoletta Calzolari, Frédéric Béchet, Philippe Blache, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, et al., 1554–66. Marseille, France: European Language Resources Association. https://aclanthology.org/2020.lrec-1.194.

Christop, Iwona. 2024. “NEMO: Dataset of Emotional Speech in Polish.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 12111–16. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1059.

Cortal, Gustave. 2024. “Sequence-to-Sequence Language Models for Character and Emotion Detection in Dream Narratives.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 14717–28. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1282.

Dick, Anna-Katharina, Matthias Drews, Valentin Pickard, and Victoria Pierz. 2024. “GIL-GALaD: Gender Inclusive Language - German Auto-Assembled Large Database.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 7740–45. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.684.

Feger, Marc, and Stefan Dietze. 2024. “TACO – Twitter Arguments from COnversations.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 15522–29. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1349.

Fiorentini, Ilaria, Marco Forlano, and Nicholas Nese. 2024. “Towards the WhAP Corpus: A Resource for the Study of Italian on WhatsApp.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 16659–63. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1448.

Fruth, Leon, Robin Jegan, and Andreas Henrich. 2024. “An Approach Towards Unsupervised Text Simplification on Paragraph-Level for German Texts.” In Proceedings of the Workshop on DeTermIt! Evaluating Text Difficulty in a Multilingual Context @ LREC-COLING 2024, edited by Giorgio Maria Di Nunzio, Federica Vezzani, Liana Ermakova, Hosein Azarbonyad, and Jaap Kamps, 77–89. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.determit-1.8.

Giannouris, Polydoros, Theodoros Myridis, Tatiana Passali, and Grigorios Tsoumakas. 2024. “Plain Language Summarization of Clinical Trials.” In Proceedings of the Workshop on DeTermIt! Evaluating Text Difficulty in a Multilingual Context @ LREC-COLING 2024, edited by Giorgio Maria Di Nunzio, Federica Vezzani, Liana Ermakova, Hosein Azarbonyad, and Jaap Kamps, 60–67. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.determit-1.6.

Jin, Mali, Daniel Preotiuc-Pietro, A. Seza Doğruöz, and Nikolaos Aletras. 2024. “Who Is Bragging More Online? A Large Scale Analysis of Bragging in Social Media.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 17575–87. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1529.

Jon, Josef, and Ondřej Bojar. 2024. “GAATME: A Genetic Algorithm for Adversarial Translation Metrics Evaluation.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 7562–69. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.668.

Kalashnikova, Natalia, Ioana Vasilescu, and Laurence Devillers. 2024. “Linguistic Nudges and Verbal Interaction with Robots, Smart-Speakers, and Humans.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 10555–64. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.923.

Kim, Evgeny, and Roman Klinger. 2018. “Who Feels What and Why? Annotation of a Literature Corpus with Semantic Roles of Emotions.” In Proceedings of the 27th International Conference on Computational Linguistics, edited by Emily M. Bender, Leon Derczynski, and Pierre Isabelle, 1345–59. Santa Fe, New Mexico, USA: Association for Computational Linguistics. https://aclanthology.org/C18-1114.

Klinger, Roman. 2023. “Where Are We in Event-Centric Emotion Analysis? Bridging Emotion Role Labeling and Appraisal-Based Approaches.” In Proceedings of the Big Picture Workshop, edited by Yanai Elazar, Allyson Ettinger, Nora Kassner, Sebastian Ruder, and Noah A. Smith, 1–17. Singapore: Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.bigpicture-1.1.

Klinger, Roman, Naoaki Okazaki, Nicoletta Calzolari, and Min-Yen Kan, eds. 2024. Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024): Tutorial Summaries. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-tutorials.0.

Li, Junlin, Bo Peng, and Yu-Yin Hsu. 2024. “Emstremo: Adapting Emotional Support Response with Enhanced Emotion-Strategy Integrated Selection.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 5794–5805. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.514.

Maladry, Aaron, Alessandra Teresa Cignarella, Els Lefever, Cynthia van Hee, and Veronique Hoste. 2024. “Human and System Perspectives on the Expression of Irony: An Analysis of Likelihood Labels and Rationales.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 8372–82. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.734.

Nejadgholi, Isar, Kathleen C. Fraser, Anna Kerkhof, and Svetlana Kiritchenko. 2024. “Challenging Negative Gender Stereotypes: A Study on the Effectiveness of Automated Counter-Stereotypes.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 3005–15. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.268.

Nemec Zlatolas, L., T. Welzer, M. Hölbl, M. Heričko, and A. Kamišalić. 2019. “A Model of Perception of Privacy, Trust, and Self-Disclosure on Online Social Networks.” Entropy (Basel) 21 (8).

Plaza-del-Arco, Flor Miriam, Alba A. Cercas Curry, Amanda Cercas Curry, and Dirk Hovy. 2024. “Emotion Analysis in NLP: Trends, Gaps and Roadmap for Future Directions.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 5696–5710. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.506.

Plaza-del-Arco, Flor Miriam, Debora Nozza, and Dirk Hovy. 2024. “Wisdom of Instruction-Tuned Language Model Crowds. Exploring Model Label Variation.” In Proceedings of the 3rd Workshop on Perspectivist Approaches to NLP (NLPerspectives) @ LREC-COLING 2024, edited by Gavin Abercrombie, Valerio Basile, Davide Bernadi, Shiran Dudy, Simona Frenda, Lucy Havens, and Sara Tonelli, 19–30. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.nlperspectives-1.2.

Prochnow, Alexander, Johannes E. Bendler, Caroline Lange, Foivos Ioannis Tzavellos, Bas Marco Göritzer, Marijn ten Thij, and Riza Batista-Navarro. 2024. “IDEM: The IDioms with EMotions Dataset for Emotion Recognition.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 8569–79. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.752.

Pu, Dongqi, Yifan Wang, Jia E. Loy, and Vera Demberg. 2024. “SciNews: From Scholarly Complexities to Public Narratives – a Dataset for Scientific News Report Generation.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 14429–44. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1258.

Pucci, Giulia, Leonardo Ranaldi, and Andres Freitas. 2024. “Does the Order Matter? Curriculum Learning over Languages.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 5212–20. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.464.

Raithel, Lisa, Hui-Syuan Yeh, Shuntaro Yada, Cyril Grouin, Thomas Lavergne, Aurélie Névéol, Patrick Paroubek, et al. 2024. “A Dataset for Pharmacovigilance in German, French, and Japanese: Annotating Adverse Drug Reactions Across Languages.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 395–414. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.36.

Rao, Abhinav Sukumar, Atharva Roshan Naik, Sachin Vashistha, Somak Aditya, and Monojit Choudhury. 2024. “Tricking LLMs into Disobedience: Formalizing, Analyzing, and Detecting Jailbreaks.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 16802–30. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1462.

Someya, Taiga, Ryo Yoshida, and Yohei Oseki. 2024. “Targeted Syntactic Evaluation on the Chomsky Hierarchy.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 15595–605. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1356.

Song, Yuhan, and Houfeng Wang. 2024. “Would You Like to Make a Donation? A Dialogue System to Persuade You to Donate.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 17707–17. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.1540.

Troiano, Enrica, Sebastian Padó, and Roman Klinger. 2021. “Emotion Ratings: How Intensity, Annotation Confidence and Agreements Are Entangled.” In Proceedings of the Eleventh Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, edited by Orphee De Clercq, Alexandra Balahur, Joao Sedoc, Valentin Barriere, Shabnam Tafreshi, Sven Buechel, and Veronique Hoste, 40–49. Online: Association for Computational Linguistics. https://aclanthology.org/2021.wassa-1.5.

Troiano, Enrica, and Piek T. J. M. Vossen. 2024. “CLAUSE-ATLAS: A Corpus of Narrative Information to Scale up Computational Literary Analysis.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 3283–96. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.292.

Velutharambath, Aswathy, Amelie Wührl, and Roman Klinger. 2024. “Can Factual Statements Be Deceptive? The DeFaBel Corpus of Belief-Based Deception.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 2708–23. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.243.

Wemmer, Eileen, Sofie Labat, and Roman Klinger. 2024. “EmoProgress: Cumulated Emotion Progression Analysis in Dreams and Customer Service Dialogues.” In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), edited by Nicoletta Calzolari, Min-Yen Kan, Veronique Hoste, Alessandro Lenci, Sakriani Sakti, and Nianwen Xue, 5660–77. Torino, Italia: ELRA; ICCL. https://aclanthology.org/2024.lrec-main.503.

Wuehrl, Amelie, Yarik Menchaca Resendiz, Lara Grimminger, and Roman Klinger. 2024. “What Makes Medical Claims (Un)verifiable? Analyzing Entity and Relation Properties for Fact Verification.” In Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics (Volume 1: Long Papers), edited by Yvette Graham and Matthew Purver, 2046–58. St. Julian’s, Malta: Association for Computational Linguistics. https://aclanthology.org/2024.eacl-long.124.

Wührl, Amelie, and Roman Klinger. 2022. “Recovering Patient Journeys: A Corpus of Biomedical Entities and Relations on Twitter (BEAR).” In Proceedings of the Thirteenth Language Resources and Evaluation Conference, edited by Nicoletta Calzolari, Frédéric Béchet, Philippe Blache, Khalid Choukri, Christopher Cieri, Thierry Declerck, Sara Goggi, et al., 4439–50. Marseille, France: European Language Resources Association. https://aclanthology.org/2022.lrec-1.472.